I was recently asked by a client whether there was a way to sign documents with digital signatures that cannot be modified, thereby proving that a signed document was signed by a specific individual. They also wanted to allow additional signatures to be added, because the document is essentially an invoice that requires two approval signatures plus a signature from someone in accounting verifying that it has been entered into the accounting system.

The client already uses Adobe Acrobat Professional to create PDF documents and had noticed the signature features in the GUI, but wasn’t sure how to use them, so they asked me to look into it. I’m in no way an Adobe Acrobat expert (definitely not my forte) as I don’t use it, so I did a bit of research online. While it looks like it can be done, I couldn’t find a clear document from Adobe demonstrating how. Furthermore, Adobe appears to promote its EchoSign service, which the client didn’t want to use because of the additional cost.

Knowing that Adobe Acrobat supports certificate-based signing, I sat down at a workstation with Adobe Acrobat Professional, played around with the settings and figured out a way to do it with certificates issued by a Microsoft Certificate Authority. I suspect many others need a quick and cheap solution like this, so I thought I’d blog the process.

Step #1 – Create a new Certificate Template for Digital Signatures

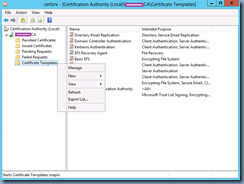

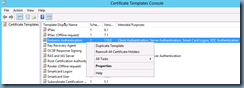

Begin by launching the Active Directory Certificate Services console and opening the Certificate Templates section, then right-click the Code Signing template and select Duplicate Template:

In the General tab, give the Template display name and Template name meaningful values (I called it Adobe Signature), increase the Validity period beyond 1 year if desired, and check the Publish certificate in Active Directory checkbox:

Navigate to the Request Handling tab, change the Purpose field to Signature and encryption, and check the Allow private key to be exported checkbox:

Navigate to the Subject Name tab. If desired, you can change the option to Supply in the request, which lets the enroller (the user requesting the certificate) fill out the certificate fields. Alternatively, leave it at the default, Build from this Active Directory information, with Subject name format set to Fully distinguished name and the User principal name (UPN) checkbox checked. I prefer the default because the issued certificates will always have the same fields filled out, and it’s also easier for the enroller to request the certificate:
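With Build from this Active Directory information and Fully distinguished name, the certificate subject comes from the user’s location in Active Directory, so it reads along the lines of `CN=Jane Doe,OU=Staff,DC=contoso,DC=com` (hypothetical names), while the UPN goes into the Subject Alternative Name. The Python sketch below splits such a string into attribute/value pairs, which can help when checking what a template actually produced. It assumes the comma-separated RFC 4514 string form with backslash escapes and, for brevity, does not handle multi-valued RDNs (`+`) or hex escapes; `parse_dn` is a hypothetical helper, not part of any Windows tool.

```python
from __future__ import annotations


def _split_rdn(rdn: str) -> tuple[str, str]:
    """Split one 'ATTR=value' component; the attribute type never contains '='."""
    attr, sep, value = rdn.partition("=")
    if not sep:
        raise ValueError(f"malformed RDN: {rdn!r}")
    # Note: stripping also drops escaped leading/trailing spaces (sketch limitation).
    return attr.strip().upper(), value.strip()


def parse_dn(dn: str) -> list[tuple[str, str]]:
    """Split a distinguished name into (attribute, value) pairs.

    Backslash-escaped characters such as '\\,' are kept in the value;
    multi-valued RDNs ('+') and hex escapes ('\\2C') are not handled.
    """
    pairs: list[tuple[str, str]] = []
    current: list[str] = []
    escaped = False
    for ch in dn:
        if escaped:
            current.append(ch)  # keep the escaped character literally
            escaped = False
        elif ch == "\\":
            escaped = True  # the next character belongs to the value
        elif ch == ",":
            pairs.append(_split_rdn("".join(current)))
            current = []
        else:
            current.append(ch)
    if escaped:
        raise ValueError("dangling escape at end of DN")
    if current:
        pairs.append(_split_rdn("".join(current)))
    return pairs
```

For example, `parse_dn("CN=Smith\\, Jane,OU=Staff,DC=contoso,DC=com")` keeps the escaped comma inside the common name instead of splitting on it.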

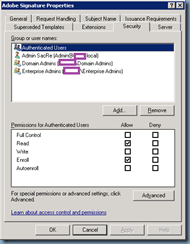

Navigate to the Security tab, select Authenticated Users and check the Allow – Enroll checkbox:

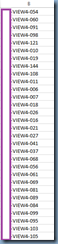

Step #2 – Publish the new Certificate Template

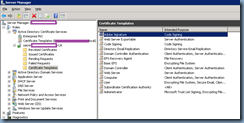

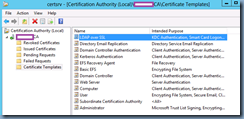

With the new certificate template created, navigate to the Certificate Templates node in the Certification Authority console, right-click it, select New and click Certificate Template to Issue:

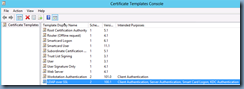

Notice that the new Adobe Signature template is listed; select it and click OK:
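If you prefer the command line, `certutil -SetCATemplates +<TemplateName>` adds a template to the list the CA issues. The Python sketch below only wraps that call; it assumes it is run on the CA server in an elevated session and that the template name (the Template name field on the General tab, not the display name) is `AdobeSignature`, which is a hypothetical value. The function names are also hypothetical.

```python
from __future__ import annotations

import subprocess
import sys


def publish_template_command(template_name: str) -> list[str]:
    """Build the certutil call that adds a template to the CA's issue list."""
    if not template_name.strip():
        raise ValueError("template name must not be empty")
    # certutil expects the template name, not the display name
    return ["certutil", "-SetCATemplates", f"+{template_name}"]


def publish_template(template_name: str) -> None:
    """Run the command; intended for the CA server, in an elevated session."""
    if sys.platform != "win32":
        raise OSError("certutil is only available on Windows")
    subprocess.run(publish_template_command(template_name), check=True)
```

On the CA you would call `publish_template("AdobeSignature")`; building the argument list separately keeps the quoting out of the shell and makes the command easy to inspect first.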

Step #3 – Request a new certificate for the user

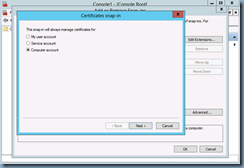

With the new certificate template created and published, go to the workstation of a user who needs a certificate for signing Adobe Acrobat PDFs, open the MMC and add the Certificates snap-in for the current user. In the Certificates – Current User console, navigate to Personal –> Certificates, right-click in the empty right-hand pane and select All Tasks –> Request New Certificate…:

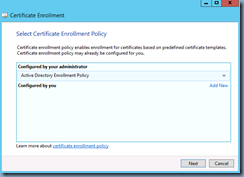

Proceed through the wizard:

Select Adobe Signature as the certificate:

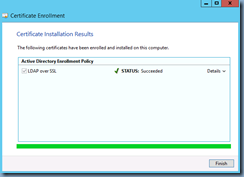

Complete the enrollment:

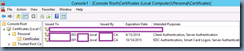

You should now have a certificate issued by the Active Directory integrated Microsoft Certificate Authority:
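On recent Windows versions the same enrollment can also be scripted with `certreq -enroll`, where `-user` targets the Current User store and `-q` suppresses prompts. The Python sketch below is a hypothetical wrapper around that command, assuming it runs under the signing user’s own logon on their workstation and that the template name is `AdobeSignature`; it is not what the post used, which is the GUI wizard.

```python
from __future__ import annotations

import subprocess
import sys


def enroll_command(template_name: str) -> list[str]:
    """certreq arguments for a silent enrollment into the current user's store."""
    # -user: enroll in the user context (Current User store)
    # -q:    quiet mode, suppress interactive prompts
    return ["certreq", "-enroll", "-user", "-q", template_name]


def enroll(template_name: str) -> None:
    """Run certreq as the signing user on their own workstation."""
    if sys.platform != "win32":
        raise OSError("certreq is only available on Windows")
    subprocess.run(enroll_command(template_name), check=True)
```

For example, `enroll("AdobeSignature")` run from a logon script would request the certificate without walking each user through the wizard.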

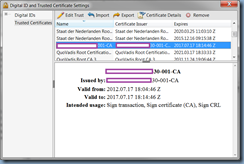

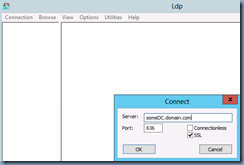

Step #4 – Import Microsoft Certificate Authority Root Certificate into Adobe Acrobat Professional Trusted CAs

What I noticed with Adobe Acrobat Professional is that it does not appear to use the local workstation’s trusted store for Certificate Authorities. This means that even if a certificate is issued by a Microsoft Active Directory integrated root CA that is listed in Trusted Root Certification Authorities, Adobe will not automatically trust it. So before using the certificate enrolled in Step #3, we need to go to every desktop involved in the signing process and manually import the root CA certificate. I wish there were an easier way to do this, and maybe there is, but a brief Google search did not turn up a GPO ADM template for importing CAs into Adobe Acrobat Professional (I will update this post if I figure out a way).

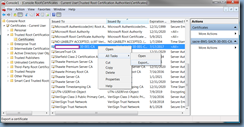

Navigate to the Trusted Root Certification Authorities folder in the MMC and right click on the root CA certificate in the store then choose All Tasks –> Export…:

Proceed through the wizard to export the root CA’s certificate:

Open Adobe Acrobat Professional:

Click the Edit menu and select Preferences…:

Navigate to the Signatures category and click on the More button beside Identities & Trusted Certificates:

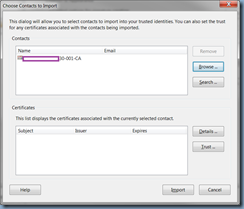

Select Trusted Certificates in the left pane and click Import:

Click on the Browse button:

Select the exported root CA certificate:

Click on the Import button:

A confirmation window will be displayed indicating the certificate has been imported:

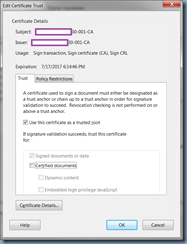

Notice that the certificate is now imported. Before you proceed, select the certificate and click on Certificate Details:

Check the Use this certificate as a trusted root checkbox. Make sure this step is completed: even though the certificate is imported, Adobe will not trust it otherwise and will display signatures as signed by an unknown source:
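Because this step decides which CA Acrobat trusts, it is worth confirming that the file you imported is the same root certificate the Windows store shows. The Windows certificate dialog shows a SHA-1 Thumbprint, and Acrobat’s certificate viewer typically lists a digest on its Details tab. The Python sketch below, an illustration rather than part of the original procedure, computes the SHA-1 digest of the exported root CA file whether it was saved as DER or Base-64; the function names are hypothetical.

```python
import base64
import hashlib
import re

_PEM = re.compile(
    rb"-----BEGIN CERTIFICATE-----(.+?)-----END CERTIFICATE-----", re.DOTALL
)


def to_der(data: bytes) -> bytes:
    """Return DER bytes for either a DER or a Base-64 (PEM) encoded export."""
    match = _PEM.search(data)
    if match:
        # b64decode discards line breaks and other non-alphabet characters
        return base64.b64decode(match.group(1))
    return data  # assume the file is already DER


def thumbprint(data: bytes) -> str:
    """SHA-1 over the DER certificate, as lowercase hex without separators."""
    return hashlib.sha1(to_der(data)).hexdigest()


def matches(data: bytes, expected: str) -> bool:
    """Compare with a thumbprint copied from a dialog, ignoring spaces,
    colons, case and invisible characters picked up by copy/paste."""
    cleaned = re.sub(r"[^0-9a-fA-F]", "", expected).lower()
    return thumbprint(data) == cleaned
```

Read the exported `.cer` file in binary mode and pass its bytes to `matches` together with the thumbprint copied from the MMC; a mismatch means the wrong certificate was imported.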

Step #5 – Signing PDFs with certificate signatures

From here, there are two ways users can sign PDF documents:

- Have them select a certificate already in their local desktop’s Certificate store

- Have them sign it with a PFX file (an exported certificate in a flat file)

#1 is convenient because users simply select the certificate when signing and no password is required. It suits users who always work from the same desktop.

#2 suits users who sign documents from different workstations, since a PFX file is easy to carry around or access from a network share. Note that the PFX is password protected.

I will demonstrate what both look like:

Have them select a certificate already in their local desktop’s Certificate store:

Signing a PDF with a certificate from the local desktop’s store requires no further setup. All users need to do is open a document in Adobe Acrobat Pro:

Click the Sign button in the top right corner, then select Place Signature:

Click on the Drag New Signature Rectangle button:

Drag a rectangle over the area where the signature should appear:

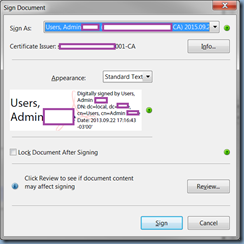

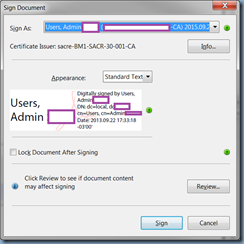

Assuming there’s just one certificate available, the user’s certificate should already be selected in the Sign As field; if not, select it, then click the Sign button:

Save the document:

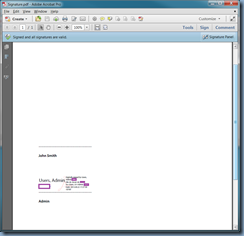

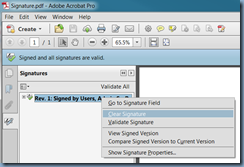

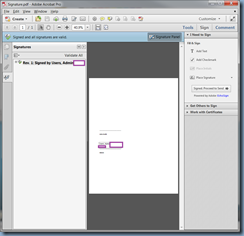

Note the signature and the "Signed and all signatures are valid." message at the top:

Clicking on the Signature Panel button will show the signatures applied to the document:

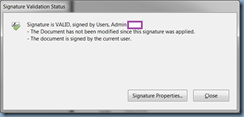

Right-clicking the signature and selecting Validate Signature lets you review the signature’s properties:

Note that if Clear Signature is selected, the signature is marked as cleared but its line item is not deleted, which preserves a full history of what has been done with the signatures.

Have them sign it with a PFX file (an exported certificate in a flat file):

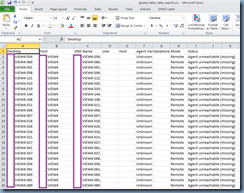

To sign with a PFX, first export the issued certificate, similar to the way we exported the root CA certificate. Navigate to the Personal –> Certificates folder in the MMC, right-click the issued certificate and choose All Tasks –> Export…:

Proceed through the wizard to export the certificate:

Ensure Yes, export the private key is selected:

Enter a password:

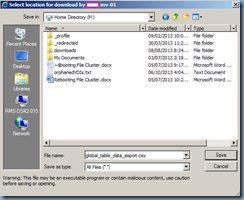

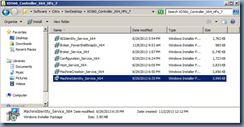

Select a path:

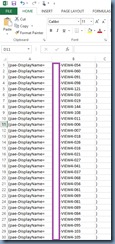

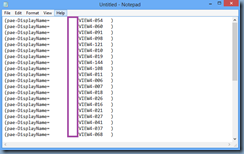

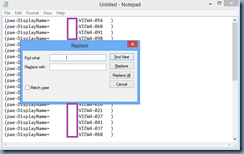
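The exported PFX contains the user’s private key, so anyone holding both the file and its password can sign as that user; this matters more with option #2, where the file is copied between machines or onto a network share. A minimal Python sketch for generating a strong password with the standard `secrets` module follows; the 20-character default, the 12-character minimum and the symbol set are assumptions, not requirements of Windows or Acrobat.

```python
import secrets
import string

# Symbol set is an assumption, trimmed to characters that are easy to type.
ALPHABET = string.ascii_letters + string.digits + "!#$%*+-=?@_"


def make_pfx_password(length: int = 20) -> str:
    """Return a random password containing lowercase and uppercase letters and digits."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Retry until every character class is present, so the result also
        # passes typical complexity rules.
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
        ):
            return candidate
```

Paste the result into the Export wizard’s password fields and hand it to the user separately from the PFX file itself.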

With the certificate exported as a PFX, open a document in Adobe Acrobat Pro, click the Sign button in the top right corner and select Place Signature:

Click on the Drag New Signature Rectangle button:

Drag a rectangle over the area where the signature should appear:

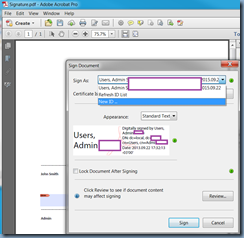

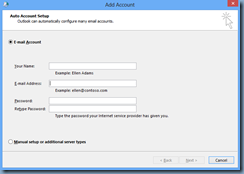

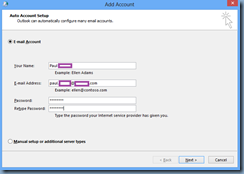

In the Sign As drop down menu, select New ID…:

Select My existing digital ID from: and A file:

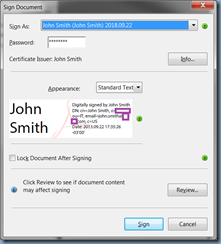

Browse to the exported PFX file and enter its password:

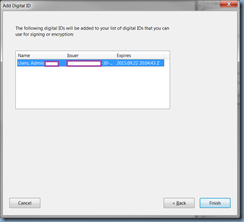

Review the properties of the certificate and click Finish:

Proceed by clicking the Sign button:

Save the document:

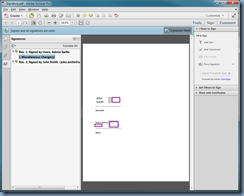

Note the signature and the "Signed and all signatures are valid." message at the top:

Clicking on the Signature Panel button will show the signatures applied to the document:

From here, you can continue to apply other users’ signatures, as shown here:

Note the second signature that’s listed as Rev. 2:
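In PDF, each signature is typically saved as an incremental update appended to the file (hence Rev. 2), and each signature covers the file as it existed when it was applied. That is why later signatures do not invalidate earlier ones, while changes to already-signed content do. The Python sketch below is a deliberately simplified model of that idea, not the PDF format: a plain SHA-256 digest stands in for the real CMS signature made with the signer’s private key (which covers a /ByteRange excluding the signature value itself), and the roles and the two-approvers-plus-accounting check are hypothetical, mirroring the client’s invoice workflow.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Signature:
    signer: str
    role: str      # hypothetical roles: "approver" or "accounting"
    covered: int   # number of leading bytes of the file this signature covers
    digest: str    # SHA-256 of those bytes (stand-in for a real CMS signature)


@dataclass
class SignedDocument:
    data: bytes
    signatures: list[Signature] = field(default_factory=list)

    def sign(self, signer: str, role: str, revision: bytes = b"") -> None:
        """Append a new revision, then sign everything up to and including it."""
        self.data += revision
        digest = hashlib.sha256(self.data).hexdigest()
        self.signatures.append(Signature(signer, role, len(self.data), digest))

    def verify(self) -> list[bool]:
        """Check each signature against only the bytes it covered."""
        return [
            hashlib.sha256(self.data[: s.covered]).hexdigest() == s.digest
            for s in self.signatures
        ]

    def unsigned_tail(self) -> int:
        """Bytes not covered by the last signature (the whole file if unsigned)."""
        if not self.signatures:
            return len(self.data)
        return len(self.data) - self.signatures[-1].covered

    def approved(self) -> bool:
        """Hypothetical policy: all signatures valid, two distinct approvers, one accounting."""
        if not self.signatures or not all(self.verify()):
            return False
        approvers = {s.signer for s in self.signatures if s.role == "approver"}
        has_accounting = any(s.role == "accounting" for s in self.signatures)
        return len(approvers) >= 2 and has_accounting
```

For example, after two approvers sign, `approved()` stays `False` until the accounting signature is added, and changing any byte of the original invoice makes every signature covering it fail `verify()`.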

This may seem like a simple task to Adobe Acrobat Pro experts, but for someone like me who doesn’t use the application, figuring out how signatures work took a while. I hope this helps anyone who finds themselves in the same situation.


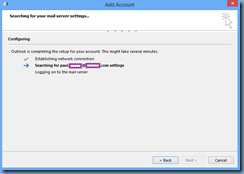

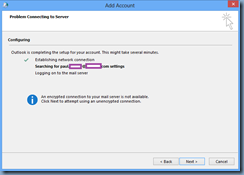

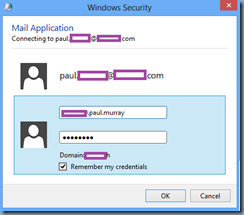


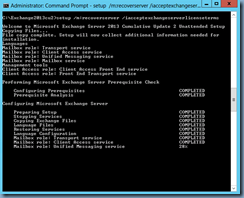
